Beowulf cluster

Revision by Eric M Gearhart

Revision as of 07:39, 11 April 2007

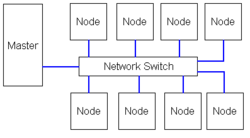

A Beowulf cluster is a group of personal computers networked together so that they can work as a single system. Splitting one computation among many processors in this way is known as parallel computing. This type of cluster is composed of a 'master' (which coordinates the processing power of the cluster) and usually many 'nodes' (computers that actually perform the calculations). The master is typically a server and is usually more powerful than the individual nodes. The nodes in the cluster do not have to be identical, although they usually are, because identical nodes simplify deployment.
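The division of labor between the master and the nodes can be sketched in Python. This is a minimal single-machine illustration, not Beowulf software: threads stand in for nodes, an in-memory queue stands in for the network, and the `compute` task and node names such as `node01` are hypothetical. Because each node pulls its next task from a shared queue, a faster node simply ends up doing more of the tasks, which is one way a cluster can cope with nodes that are not identical.

```python
import queue
import threading


def compute(n: int) -> int:
    """Hypothetical task: sum of squares of 0..n-1, standing in for real scientific work."""
    return sum(i * i for i in range(n))


def node(name: str, tasks: queue.Queue, results: dict, lock: threading.Lock) -> None:
    """A compute node: keep taking tasks from the master until told to stop."""
    while True:
        item = tasks.get()
        if item is None:  # sentinel from the master: no more work
            return
        index, n = item
        value = compute(n)
        with lock:
            results[index] = (value, name)  # record which node did the task


def master(task_sizes: list[int], node_names: list[str]) -> dict:
    """The master hands out tasks and gathers results; it does no computing itself."""
    tasks: queue.Queue = queue.Queue()
    results: dict = {}
    lock = threading.Lock()
    for index, n in enumerate(task_sizes):
        tasks.put((index, n))
    for _ in node_names:
        tasks.put(None)  # one stop signal per node, queued after all the real tasks
    workers = [
        threading.Thread(target=node, args=(name, tasks, results, lock))
        for name in node_names
    ]
    for worker in workers:
        worker.start()
    for worker in workers:
        worker.join()
    return results
```

For example, `master([10, 100, 5], ["node01", "node02"])` returns a dictionary mapping each task index to its result and the name of the node that computed it.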

The nodes usually run Linux,[1] but this is not required: Beowulf clusters have also been built with Mac OS X and FreeBSD.[2][3]

The nodes in a Beowulf cluster are networked into a small TCP/IP LAN, and have libraries and programs installed which allow processing to be shared among them.
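The TCP/IP side can be illustrated with a small sketch that uses only Python's standard `socket` module. It assumes every node listens on a port of `127.0.0.1` (standing in for separate machines on the LAN) and uses a made-up, one-line JSON message format; real clusters normally rely on libraries such as MPI or PVM rather than hand-written sockets. The master sends every node its share of the data before waiting for any reply, then adds up the partial results.

```python
import json
import socket
import threading


def serve_one_request(server: socket.socket) -> None:
    """Node side: accept one connection, read a JSON list, reply with its sum of squares."""
    conn, _addr = server.accept()
    with conn, conn.makefile("rw") as stream:
        numbers = json.loads(stream.readline())
        stream.write(json.dumps(sum(x * x for x in numbers)) + "\n")
        stream.flush()
    server.close()


def start_node() -> int:
    """Start a hypothetical node listening on 127.0.0.1 and return its port number."""
    server = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
    server.bind(("127.0.0.1", 0))  # port 0 asks the OS for any free port
    server.listen(1)
    port = server.getsockname()[1]
    threading.Thread(target=serve_one_request, args=(server,), daemon=True).start()
    return port


def scatter_gather(numbers: list[int], ports: list[int]) -> int:
    """Master side: send each node a share of the data, then collect and add the replies."""
    shares = [numbers[i::len(ports)] for i in range(len(ports))]  # round-robin split
    open_streams = []
    for port, share in zip(ports, shares):
        sock = socket.create_connection(("127.0.0.1", port))
        stream = sock.makefile("rw")
        stream.write(json.dumps(share) + "\n")
        stream.flush()
        open_streams.append((sock, stream))
    # Every node now has its work; only now does the master wait for results.
    total = 0
    for sock, stream in open_streams:
        total += json.loads(stream.readline())
        stream.close()
        sock.close()
    return total
```

With three nodes started by `ports = [start_node() for _ in range(3)]`, the call `scatter_gather(list(range(10)), ports)` returns 285, the same as computing the sum of squares on one machine.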

The name Beowulf is derived from the main character in the Old English epic Beowulf.

Beowulf Development

In early 1993, NASA scientists Donald Becker and Thomas Sterling began sketching out the details of what would become a revolutionary way to build a cheap supercomputer: link low-cost desktops together with commodity, off-the-shelf (COTS) hardware and combine their performance.[4]

By 1994, under the sponsorship of the "High Performance Computing & Communications for Earth & Space Sciences" (HPCC/ESS)[5] project, the Beowulf Parallel Workstation project at NASA's Goddard Space Flight Center had begun.[6][7]

Popularity in High-Performance Computing

Today Beowulf systems are deployed worldwide, both as "cheap supercomputers" and in more traditional high-performance computing projects, chiefly for number crunching and scientific computing.

As of 2007, more than 50 percent of the machines on the TOP500 list of supercomputers[8] are clusters of this sort, and many of them are built on Becker and Sterling’s own architecture, the Beowulf cluster.[4]

Common Beowulf clusters

No particular piece of software defines a cluster as a Beowulf. Commonly used parallel processing libraries include MPI (Message Passing Interface) and PVM (Parallel Virtual Machine). Both let the programmer divide a task among a group of networked computers and collect the results of the processing. It is a common misconception that any software will run faster on a Beowulf. Software must be rewritten to take advantage of the cluster; specifically, its work must be divisible into multiple independent computations that can run in parallel.
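The requirement that a program contain independent computations can be illustrated with a short sketch. Summing squares splits cleanly into chunks whose partial sums are simply added, whereas an iterated formula (here the logistic map, chosen only as an arbitrary example) cannot be split, because every step needs the result of the one before. A thread pool on a single machine stands in for the cluster nodes; on a real Beowulf this division would typically be expressed with MPI or PVM instead.

```python
from concurrent.futures import ThreadPoolExecutor


def partial_sum_of_squares(chunk: range) -> int:
    """Independent work: each chunk needs no data from any other chunk."""
    return sum(x * x for x in chunk)


def parallel_sum_of_squares(n: int, workers: int) -> int:
    """Divide 0..n-1 into one chunk per worker, compute each chunk separately, then combine."""
    step = max(1, -(-n // workers))  # ceiling division, so every value lands in some chunk
    chunks = [range(start, min(start + step, n)) for start in range(0, n, step)]
    with ThreadPoolExecutor(max_workers=workers) as pool:
        return sum(pool.map(partial_sum_of_squares, chunks))


def logistic_orbit(x0: float, r: float, steps: int) -> float:
    """Dependent work: step k needs the result of step k-1, so the steps cannot be split up."""
    x = x0
    for _ in range(steps):
        x = r * x * (1.0 - x)
    return x
```

Calling `parallel_sum_of_squares(10, 3)` computes the chunks 0 to 3, 4 to 7 and 8 to 9 separately and still returns 285, the serial answer. `logistic_orbit` has no such decomposition: adding more nodes would not make it finish sooner.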

References

[1] "Beowulf Project Overview" (Retrieved 08-April-2007).

[2] "Mac OS X Beowulf Cluster Deployment Notes" (Retrieved 08-April-2007).

[3] "A small Beowulf Cluster running FreeBSD" (Retrieved 08-April-2007).

[4] "The inside story of the Beowulf saga" (Retrieved 11-April-2007).

[5] "High Performance Computing & Communications for Earth & Space Sciences homepage" (Retrieved 11-April-2007).

[6] "Donald Becker's Bio at Beowulf.org" (Retrieved 11-April-2007).

[7] "Beowulf History from beowulf.org" (Retrieved 11-April-2007).

[8] "TOP500 Supercomputer Sites" (Retrieved 11-April-2007).