Byte: Difference between revisions

imported>Joshua David Williams (→Conflicting definitions: still working on it.. ;-)) |

imported>Greg Woodhouse m (EIC -> IEC) |

Revision as of 20:47, 14 April 2007

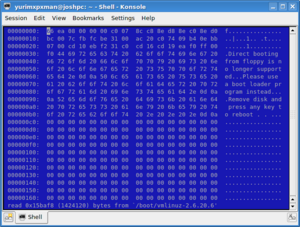

In computer science, a byte is a unit of data consisting of eight bits. When grouped together, bytes can hold the information that makes up a document, such as a photograph or a book. All data represented on a computer are composed of bytes, from e-mails and pictures to programs and data stored on a hard drive. Although the byte may appear to be a simple concept at first glance, its actual definition is more complex.

Technical definition

In electronics, information is represented by a switch between two states, usually referred to as 'on' and 'off'. To represent these states, computer scientists use the values 0 (off) and 1 (on); a single such value is called a bit. Half of a byte (four bits) is referred to as a nibble. A word is the standard number of bytes that a processor handles as a unit, and its size depends on the architecture; on some architectures, memory can be addressed only in multiples of the word size. For example, a 16-bit processor has words consisting of two bytes (2 x 8 = 16), a 32-bit processor has words consisting of four bytes (4 x 8 = 32), and so on.

Each byte is made of eight bits and can represent any number from 0 to 255. The number of possible values, 256 when 0 is included, is obtained by raising the number of possible values of a bit (two) to the power of the length of a byte (eight); thus, 2⁸ = 256 possible values in a byte.
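The arithmetic above can be checked with a few lines of Python. This is only an illustrative sketch; the function names `value_count` and `split_nibbles` are made up for this example and do not come from any library.

```python
BITS_PER_BYTE = 8


def value_count(bits: int) -> int:
    """Number of distinct values representable with the given number of bits."""
    return 2 ** bits


def split_nibbles(byte: int) -> tuple[int, int]:
    """Return the (high, low) four-bit halves of an eight-bit value."""
    if not 0 <= byte <= 255:
        raise ValueError("expected a value from 0 to 255")
    return byte >> 4, byte & 0x0F


print(value_count(BITS_PER_BYTE))      # 256 possible values
print(value_count(BITS_PER_BYTE) - 1)  # 255, the largest value in a byte
print(split_nibbles(0xA7))             # (10, 7)
```

Each nibble holds four bits, so it can take 2⁴ = 16 values, which is why a byte is conveniently written as two hexadecimal digits.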

Bytes can be used to represent a countless array of data types, from characters in a string of text, to the assembled and linked machine code of a binary executable file. Every file, sector of system memory, and network stream is composed of bytes.

Perhaps the oldest use of bytes is plain text, that is, plain alphanumeric characters with no punctuation. To make up for the absence of basic punctuation, telegrams would often use the word "STOP" to represent a period. The numeric value assigned to each character has varied over the years. Today, however, we have the American Standard Code for Information Interchange (ASCII), which keeps data readable when it is transferred between different systems, such as from one operating system to another. For instance, a user who typed a plain text document in Linux will be able to read the file correctly on a Macintosh computer. One example of ASCII is the capital letters of the English language, which range from 65 for "A" to 90 for "Z" in decimal (101 to 132 in octal).
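As a small illustration of the ASCII mapping, the sketch below encodes text to bytes and prints the resulting values. In decimal, "A" is 65 and "Z" is 90; the same values are 101 and 132 in octal. The helper name `ascii_codes` is hypothetical.

```python
def ascii_codes(text: str) -> list[int]:
    """Return the ASCII value of each character in the text."""
    # str.encode raises UnicodeEncodeError for characters outside ASCII.
    return list(text.encode("ascii"))


print(ascii_codes("AZ"))           # [65, 90]
print(oct(ord("A")), oct(ord("Z")))  # 0o101 0o132
print(bytes([72, 105]).decode("ascii"))  # Hi
```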

Endianness

Of course, since data almost always consist of more than one byte, these strings of numbers must be arranged in a certain fashion in order for a device to read them correctly. In computer science, we refer to this as endianness. Just as some human languages are written from left to right, such as English, while others are written from right to left, such as Hebrew, bytes are not always arranged in the same fashion.

Suppose we are writing a program that uses the number 1024, which is 0x0400 in hexadecimal and therefore occupies two bytes: 0x04 and 0x00. In this example, 0x04 is the most significant byte. If this byte is written first, that is, at the lowest memory address, the format is called 'big endian'. If it is written last, at the highest memory address, the format is called 'little endian'. This is typically not a problem when dealing with local system memory, since the endianness is determined by the processor's architecture. However, it can pose a problem in some instances, such as network streams. For this reason, the two ends of a connection must agree on a byte order before any data are exchanged; many network protocols fix a big-endian 'network byte order' for this purpose. This ensures that the information is read correctly at the receiving end.
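The two byte orders can be seen directly in Python. The sketch below stores 1024 (0x0400 in hexadecimal, so its most significant byte is 0x04) in two bytes both ways, using only the standard `int.to_bytes`, `int.from_bytes`, and `struct.pack` calls. The choice of a two-byte width is an assumption made for the example.

```python
import struct
import sys

value = 1024  # 0x0400: most significant byte 0x04, least significant 0x00

big = value.to_bytes(2, byteorder="big")        # most significant byte first
little = value.to_bytes(2, byteorder="little")  # least significant byte first

print(big.hex())     # 0400
print(little.hex())  # 0004

# Reading little-endian bytes as if they were big-endian gives the wrong number.
print(int.from_bytes(little, byteorder="big"))  # 4

# "!" in a struct format string selects network (big-endian) byte order.
print(struct.pack("!H", value) == big)  # True

# The byte order of the machine running this script: 'little' or 'big'.
print(sys.byteorder)
```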

Word origin and ambiguity

The word 'byte' is generally believed to have been coined by Dr. Werner Buchholz of IBM in 1956. It is a play on the word 'bit', and originally referred to the number of bits used to represent a character.[1] This number is usually eight, but in some cases (especially in times past) it has ranged from as few as 2 to as many as 128 bits. Thus, the word 'byte' is actually an ambiguous term. For this reason, an eight-bit byte is sometimes referred to as an 'octet'.[2]

Sub-units

Although it is the basic unit, the byte is not the most commonly used unit of data. Because files are normally thousands or even billions of times larger than a byte, terms designating larger quantities of bytes are used to improve readability. Metric prefixes are added to the word byte: kilo for one thousand bytes (kilobyte), mega for one million (megabyte), giga for one billion (gigabyte), and tera for one trillion (terabyte). One thousand gigabytes compose a terabyte, and even the largest consumer hard drives at the time of this revision hold only three-fourths of a terabyte (750 'gigs', or gigabytes). The rapid pace of technological advancement may make the terabyte commonplace in the future, however.

Conflicting definitions

Traditionally, the computer world has used a value of 1024 instead of 1000 when referring to a kilobyte. This was done because programmers needed a number compatible with base 2, and 1024 is equal to 2 to the 10th power. Because the two meanings are easily confused, the International Electrotechnical Commission (IEC) has made an effort to remedy the problem. It has standardized a new system called the 'binary prefix', which replaces the word 'kilobyte' with 'kibibyte', abbreviated as KiB. This solution has since been approved by the IEEE on a trial-use basis, and may one day become a true standard.[3]

While the difference between 1000 and 1024 may seem trivial, the discrepancy grows with the size of the prefix. The difference between 1 TB and 1 TiB, for instance, is approximately 10%. As hard drives become larger, the need for a distinction between these two sets of prefixes will grow. This has been a problem for hard disk drive manufacturers in particular. For example, one well-known manufacturer, Western Digital, was taken to court over its use of base 10 when labeling the capacity of its drives. Labeling a drive's capacity in base 10 yields a larger number than the same capacity expressed in base 2, so a consumer who assumes base 2 will expect more storage than the drive provides.[4]
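To make the labeling issue concrete, the sketch below converts a capacity stated with a metric prefix into the corresponding binary unit. The function name `decimal_to_binary_units` and its parameters are hypothetical, chosen only for this illustration.

```python
def decimal_to_binary_units(amount: float, power: int) -> float:
    """Convert amount * 1000**power bytes into units of 1024**power bytes.

    power is 1 for kilo/kibi, 2 for mega/mebi, 3 for giga/gibi, and so on.
    """
    return amount * 1000 ** power / 1024 ** power


# A drive labeled 750 GB holds about 698.5 GiB.
print(round(decimal_to_binary_units(750, 3), 1))  # 698.5

# One terabyte is about 0.909 tebibytes.
print(round(decimal_to_binary_units(1, 4), 3))    # 0.909
```

An operating system that reports sizes in binary units while calling them "GB" would therefore show a 750 GB drive as roughly 698 "GB".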

Table of prefixes

| Metric (abbr.) | Value | Binary (abbr.) | Value | Difference* | Difference in bytes |
|---|---|---|---|---|---|
| byte (B) | 10⁰ = 1000⁰ | byte (B) | 2⁰ = 1024⁰ | 0% | 0 |
| kilobyte (KB) | 10³ = 1000¹ | kibibyte (KiB) | 2¹⁰ = 1024¹ | 2.4% | 24 |
| megabyte (MB) | 10⁶ = 1000² | mebibyte (MiB) | 2²⁰ = 1024² | 4.9% | 48,576 |
| gigabyte (GB) | 10⁹ = 1000³ | gibibyte (GiB) | 2³⁰ = 1024³ | 7.4% | 73,741,824 |
| terabyte (TB) | 10¹² = 1000⁴ | tebibyte (TiB) | 2⁴⁰ = 1024⁴ | 10.0% | 99,511,627,776 |
| petabyte (PB) | 10¹⁵ = 1000⁵ | pebibyte (PiB) | 2⁵⁰ = 1024⁵ | 12.6% | 125,899,906,842,624 |
| exabyte (EB) | 10¹⁸ = 1000⁶ | exbibyte (EiB) | 2⁶⁰ = 1024⁶ | 15.3% | 152,921,504,606,846,976 |
| zettabyte (ZB) | 10²¹ = 1000⁷ | zebibyte (ZiB) | 2⁷⁰ = 1024⁷ | 18.1% | 180,591,620,717,411,303,424 |
| yottabyte (YB) | 10²⁴ = 1000⁸ | yobibyte (YiB) | 2⁸⁰ = 1024⁸ | 20.9% | 208,925,819,614,629,174,706,176 |

*Percentage increase of the binary value over the metric value, rounded to the nearest tenth
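The differences in the table follow from comparing 1024ⁿ with 1000ⁿ for each prefix. A minimal sketch that recomputes the byte differences and the percentage increases is shown below; the names `PREFIXES` and `difference` are made up for the example.

```python
PREFIXES = ["(none)", "kilo", "mega", "giga", "tera", "peta", "exa", "zetta", "yotta"]


def difference(power: int) -> tuple[int, float]:
    """Return (difference in bytes, percentage increase) for 1024**power vs 1000**power."""
    metric = 1000 ** power
    binary = 1024 ** power
    diff_bytes = binary - metric
    # Python integers are arbitrary precision, so diff_bytes is exact;
    # only the percentage is a rounded floating-point value.
    percent = round(diff_bytes / metric * 100, 1)
    return diff_bytes, percent


for power, name in enumerate(PREFIXES):
    diff_bytes, percent = difference(power)
    print(f"{name:>6} {percent:5.1f}% {diff_bytes:,}")
```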

Related topics

- Binary numeral system

- American Standard Code for Information Interchange (ASCII)

- Extended Binary Coded Decimal Interchange Code (EBCDIC)

- Unicode Character Encoding

References

1. Dave Wilton (2006-04-08). Wordorigins.org; bit/byte.

2. Bob Bemer (accessed 2007-04-12). Origins of the Term "BYTE".

3. IEEE Trial-Use Standard for Prefixes for Binary Multiples (accessed 2007-04-14).

4. Nate Mook (2006-06-28). Western Digital Settles Capacity Suit.