Byte

imported>Joshua David Williams (→Conflicting definitions: expounded upon the difference between KB and KiB) |


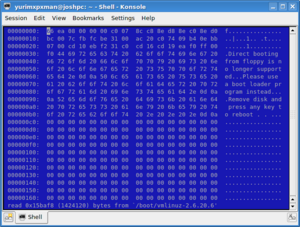

[[Image:Hexer.png|300px|right|thumb|The [[Hexer]] binary editor, displaying the [[Linux kernel]] version 2.6.20.6; this image illustrates the value of the bytes composing a program]] | |||


Revision as of 16:30, 12 April 2007

In computer science, a byte is a unit of data consisting of eight bits. When grouped together, bytes can hold the information that makes up a document, such as a photograph or a book. All data represented on a computer are composed of bytes, from e-mails and pictures to programs and the data stored on a hard drive. Although the byte may appear to be a simple concept at first glance, there is much to understand about it, starting with the bits it is made of.

Technical definition

In digital electronics, information is encoded by switching between two states, usually referred to as 'on' and 'off'. Computer scientists represent these states with the values 0 (off) and 1 (on); a single such value is called a bit.

Each byte is made of eight bits and can represent any whole number from 0 to 255. That gives 256 possible values (counting 0), a figure obtained by raising the number of values a single bit can take (two) to the power of the number of bits in a byte (eight): $2^8 = 256$.
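The arithmetic above can be checked in a few lines of Python, chosen here only for illustration. The constant name `BITS_PER_BYTE` is introduced for this sketch; the last lines rely on Python's built-in `bytes` type, which accepts only integers from 0 to 255.

```python
# Number of bits in a byte, as defined above (name chosen for this sketch).
BITS_PER_BYTE = 8

# Each bit has two possible values, so a byte has 2 ** 8 distinct values.
possible_values = 2 ** BITS_PER_BYTE
print(possible_values)  # 256

# All bits off and all bits on give the smallest and largest values.
smallest = 0b00000000
largest = 0b11111111
print(smallest, largest)  # 0 255

# Python's bytes type enforces the same range: 256 does not fit in a byte.
try:
    bytes([possible_values])
except ValueError:
    print("256 is out of range for a byte")
```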

Bytes can represent a wide variety of data, from the characters in a string of text to the instructions in a binary executable file. Every file is composed of bytes.
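To make this concrete, the Python sketch below stores a short piece of text as bytes. It assumes the ASCII encoding, in which each of these characters occupies exactly one byte; other encodings, such as UTF-8, can use several bytes for a single character, as the last line shows.

```python
text = "Byte"

# Encode the text as ASCII: here, one byte per character.
data = text.encode("ascii")
print(data)        # b'Byte'
print(list(data))  # [66, 121, 116, 101]

# Each byte is an integer from 0 to 255; show it as eight bits.
for value in data:
    print(value, format(value, "08b"))

# Decoding the same bytes recovers the original text.
assert data.decode("ascii") == text

# In UTF-8, a character outside ASCII may take more than one byte.
print(len("é".encode("utf-8")))  # 2
```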

Ambiguity

The term 'byte' is sometimes ambiguous because it has been used to refer to a number of bits other than eight. Historically, it has denoted groups of anywhere from six to nine bits. For this reason, some writers use the term 'octet' when referring specifically to a group of eight bits.

Multiples of the byte

Although fundamental, the byte is not the unit most commonly used to express data sizes. Because files are normally thousands or even billions of times larger than a single byte, prefixed units are used to keep the numbers readable. Metric prefixes are added to the word byte: kilo for one thousand bytes (kilobyte), mega for one million (megabyte), giga for one billion (gigabyte), and tera for one trillion (terabyte). One thousand gigabytes make up a terabyte, and as of 2007 even the largest consumer hard drives hold only three-quarters of a terabyte (750 'gigs', or gigabytes). Given the rapid pace of technology, however, the terabyte may soon become a common sight.
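The prefixes above can be applied mechanically. The helper `format_si` below is hypothetical, written only for this illustration: it divides a byte count by 1000 until the result drops below 1000, assuming one decimal place is enough precision. It uses only the decimal (SI) meaning of each prefix.

```python
def format_si(num_bytes: int) -> str:
    """Format a byte count with decimal (SI) prefixes, where 1 kB = 1000 bytes."""
    units = ["B", "kB", "MB", "GB", "TB", "PB", "EB", "ZB", "YB"]
    value = float(num_bytes)
    for unit in units:
        # Stop once the value is below 1000, or when no larger prefix remains.
        if value < 1000 or unit == units[-1]:
            return f"{value:.1f} {unit}"
        value /= 1000
    raise AssertionError("unreachable: the loop always returns")


print(format_si(750_000_000_000))    # 750.0 GB
print(format_si(1_000_000_000_000))  # 1.0 TB
```

Dividing repeatedly by 1000 mirrors the way each prefix is one thousand times the one before it.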

Conflicting definitions

Traditionally, the computer world has used a value of 1024 instead of 1000 when referring to a kilobyte. The reason is that binary addressing naturally produces quantities that are powers of two, and 1024 ($2^{10}$) is the power of two closest to 1000. This usage, however, is now non-standard: a unit of 1024 bytes has been given its own name, the 'kibibyte', abbreviated KiB. Names of this kind are known as 'binary prefixes'.
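The two meanings of 'kilo' can be compared directly in Python. The constant names below are illustrative only. The last lines confirm that 1024 is the power of two nearest to 1000, which is why it served as the binary stand-in for 'kilo'.

```python
KILOBYTE = 10 ** 3  # SI meaning: one thousand bytes
KIBIBYTE = 2 ** 10  # binary meaning: 2 to the 10th power bytes

print(KILOBYTE, KIBIBYTE)  # 1000 1024

# Shifting 1 left by ten bits gives the same value as 2 ** 10.
assert 1 << 10 == KIBIBYTE

# Among powers of two, 1024 is the closest to 1000.
nearest = min((2 ** n for n in range(20)), key=lambda v: abs(v - KILOBYTE))
print(nearest)  # 1024
```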

While the difference between 1000 and 1024 may seem trivial, the discrepancy grows with each larger prefix. A tebibyte (TiB), for instance, is roughly 1.1 times as large as a terabyte (TB), a difference of about 10%. As hard drives grow larger, distinguishing between the two kinds of prefix becomes increasingly important. This has been a particular problem for hard disk drive manufacturers, which label capacities with decimal prefixes while many operating systems report sizes in powers of two. For example, one well-known disk manufacturer, Western Digital, was sued over its use of the base of 1000 when labeling the capacity of its drives, and settled the suit in 2006.[1]
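The growing gap can be tabulated with a short Python sketch. `percent_gap` is a hypothetical helper written for this example; it works from exact integer powers, so even the largest prefixes are computed without overflow. The final line converts a hypothetical drive labelled 750 GB into gibibytes, the kind of figure an operating system using binary units would display.

```python
SI_NAMES = ["kilo", "mega", "giga", "tera", "peta", "exa", "zetta", "yotta"]
BINARY_NAMES = ["kibi", "mebi", "gibi", "tebi", "pebi", "exbi", "zebi", "yobi"]


def percent_gap(level: int) -> float:
    """How much larger the binary unit is than its SI counterpart, in percent.

    Level 1 compares kilo with kibi, level 2 mega with mebi, and so on.
    """
    si = 10 ** (3 * level)
    binary = 2 ** (10 * level)
    return (binary - si) / si * 100


for level, (si_name, bin_name) in enumerate(zip(SI_NAMES, BINARY_NAMES), start=1):
    print(f"1 {bin_name}byte is {percent_gap(level):.2f}% larger than 1 {si_name}byte")

# A drive labelled in decimal units, shown in binary units (hypothetical 750 GB drive).
drive_bytes = 750 * 10 ** 9
print(f"{drive_bytes / 2 ** 30:.2f} GiB")  # 698.49 GiB
```

The gap rises from 2.40% at kilo to 9.95% at tera and keeps growing with each prefix.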

Table of prefixes

| Unit with SI prefix (symbol) | Value in bytes | Unit with binary prefix (symbol) | Value in bytes |
|---|---|---|---|
| kilobyte (kB) | $10^{3}$ | kibibyte (KiB) | $2^{10}$ |
| megabyte (MB) | $10^{6}$ | mebibyte (MiB) | $2^{20}$ |
| gigabyte (GB) | $10^{9}$ | gibibyte (GiB) | $2^{30}$ |
| terabyte (TB) | $10^{12}$ | tebibyte (TiB) | $2^{40}$ |
| petabyte (PB) | $10^{15}$ | pebibyte (PiB) | $2^{50}$ |
| exabyte (EB) | $10^{18}$ | exbibyte (EiB) | $2^{60}$ |
| zettabyte (ZB) | $10^{21}$ | zebibyte (ZiB) | $2^{70}$ |
| yottabyte (YB) | $10^{24}$ | yobibyte (YiB) | $2^{80}$ |

Subtopics

- Half of a byte (four bits) is referred to as a nibble.

- A word is the natural unit of data that a processor handles in a single operation, and its size depends on the architecture. On word-addressable machines, memory can only be addressed in whole words; most modern processors are byte-addressable but still operate most efficiently on word-sized data.

For example, a 16-bit processor has words consisting of two bytes (2 × 8 = 16 bits), a 32-bit processor has words consisting of four bytes (4 × 8 = 32 bits), and so on.
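Both nibbles and words can be sketched in Python. The example byte `0xB7` is arbitrary, and the word-grouping lines assume little-endian byte order (least significant byte first); real machines may use either order. The standard `struct` module is used here only to group bytes into 16-bit and 32-bit values, not to model any particular processor.

```python
import struct

byte = 0xB7  # an arbitrary example byte: 1011 0111 in binary

# A nibble is four bits, so a byte splits into a high and a low nibble.
high_nibble = byte >> 4   # 0b1011 == 11
low_nibble = byte & 0x0F  # 0b0111 == 7
print(high_nibble, low_nibble)  # 11 7

# Bytes per word follow from the word size: bits per word divided by 8.
for bits in (16, 32):
    print(f"{bits}-bit word = {bits // 8} bytes")

# Group the same four bytes into words, assuming little-endian order.
data = bytes([0x01, 0x02, 0x03, 0x04])
print(struct.unpack("<2H", data))  # (513, 1027): two 16-bit words
print(struct.unpack("<I", data))   # (67305985,): one 32-bit word
```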

- [1] Nate Mook (2006-06-28). Western Digital Settles Capacity Suit.